Perceptron

The perceptron is one of the simplest types of artificial neural network and a fundamental building block for more complex ones. It was introduced by Frank Rosenblatt in 1958. A perceptron is a binary classifier: it assigns each input to one of two possible classes.

Key Concepts of Perceptron

1: Input Features: The perceptron takes several binary or continuous inputs (features). Each input is associated with a weight.

2: Weights: These are numerical values that represent the importance of each input feature. Initially, they are typically set to small random values or to zero, and they are then adjusted during training.

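3: Bias: This is an additional parameter that shifts the decision boundary away from the origin, so the perceptron can separate classes whose boundary does not pass through the origin. It acts as an adjustable threshold for the activation function. The bias does not help with data that is not linearly separable; a single perceptron can only learn linear decision boundaries.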

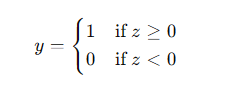

4: Activation Function: The perceptron uses a step function (also known as the Heaviside function) to produce an output. The weighted sum of the inputs plus the bias is calculated; if it is greater than or equal to a threshold (usually zero), the perceptron outputs 1 (indicating one class), otherwise it outputs 0 (indicating the other class).
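
To make the role of the bias concrete, here is a minimal sketch that is not taken from any particular library. The helper `step_output` and the hand-picked weights and biases are assumptions chosen for illustration: with the same weights, changing only the bias turns the unit from an AND gate into an OR gate.

```python
import numpy as np

def step_output(X, weights, bias):
    """Hypothetical helper: weighted sum plus bias, then a step at zero."""
    z = X @ weights + bias
    return np.where(z >= 0, 1, 0)

# All four combinations of two binary inputs
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
weights = np.array([1.0, 1.0])  # hand-picked for illustration, not learned

# Same weights, different bias: the threshold on the weighted sum moves
and_out = step_output(X, weights, bias=-1.5)  # fires only when both inputs are 1
or_out = step_output(X, weights, bias=-0.5)   # fires when at least one input is 1

print("AND:", and_out)
print("OR: ", or_out)
```

In both cases the decision boundary is a straight line in the input plane; the bias only moves that line, it does not bend it.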

Mathematical Representation

The perceptron can be mathematically represented as follows:

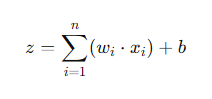

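1: Weighted Sum Calculation:

$$z = \sum_{i=1}^{n} w_i x_i + b$$

where $x_i$ are the input features, $w_i$ are the corresponding weights, and $b$ is the bias.

2: Activation Function:

$$\hat{y} = \begin{cases} 1 & \text{if } z \ge 0 \\ 0 & \text{otherwise} \end{cases}$$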
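
As a worked example with made-up numbers (the inputs, weights, and bias below are assumptions for illustration only), take two inputs $x = (1, 0)$, weights $w = (0.5, -0.3)$, and bias $b = -0.2$. The weighted sum plus bias is

$$z = w_1 x_1 + w_2 x_2 + b = (0.5)(1) + (-0.3)(0) + (-0.2) = 0.3$$

Since $z \ge 0$, a step function with its threshold at zero gives $\hat{y} = 1$. With a bias of $-0.6$ instead, $z = -0.1$ and the output would be $\hat{y} = 0$.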

Learning Algorithm

The perceptron learns by adjusting its weights based on the errors in its predictions. This process is known as the perceptron learning rule and involves the following steps:

1: Initialize Weights: Start with small random (or zero) values for the weights and the bias.

2: Calculate Output: For each training example, compute the output using the current weights and bias.

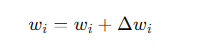

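3: Update Weights: If the output is incorrect, adjust the weights and bias to reduce the error:

$$w_i \leftarrow w_i + \Delta w_i, \qquad b \leftarrow b + \Delta b$$

where

$$\Delta w_i = \eta\,(y - \hat{y})\,x_i, \qquad \Delta b = \eta\,(y - \hat{y})$$

Here, $\eta$ is the learning rate, $y$ is the actual label, and $\hat{y}$ is the predicted label. When the prediction is correct, $y - \hat{y} = 0$ and nothing changes.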

4: Repeat: Repeat the process for a number of iterations or until the weights converge.
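
The four steps above can be sketched as a small training loop. This is an illustrative sketch under stated assumptions, not a reference implementation: the function name `train_perceptron`, the zero initialization, the learning rate of 1, and the OR-gate data are all choices made here for the example. It stops early once a full pass over the data produces no errors, which is one simple way to read "until the weights converge".

```python
import numpy as np

def train_perceptron(X, y, learning_rate=1.0, max_epochs=100):
    """Hypothetical helper: perceptron learning rule with early stopping."""
    weights = np.zeros(X.shape[1])  # step 1: initialize (zeros here, an assumption)
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x_i, target in zip(X, y):
            # step 2: compute the output with the current parameters
            predicted = 1 if np.dot(weights, x_i) + bias >= 0 else 0
            # step 3: the update is non-zero only when the prediction is wrong
            delta = learning_rate * (target - predicted)
            if delta != 0:
                weights += delta * x_i
                bias += delta
                errors += 1
        # step 4: stop once a full pass over the data makes no mistakes
        if errors == 0:
            return weights, bias, epoch
    return weights, bias, max_epochs

# OR logic gate (linearly separable, so the loop is expected to stop early)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y = np.array([0, 1, 1, 1])

weights, bias, epochs_used = train_perceptron(X, y)
predictions = np.where(X @ weights + bias >= 0, 1, 0)
print("weights:", weights, "bias:", bias, "epochs:", epochs_used)
print("predictions:", predictions)
```

A learning rate of 1 with binary inputs keeps every intermediate value an exact integer, which avoids floating-point rounding right at the threshold. On data that is not linearly separable, the loop would simply run until `max_epochs`.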

Example

Here's a simple implementation of a perceptron in Python:

    import numpy as np


    class Perceptron:
        """Single-layer perceptron for binary (0/1) classification."""

        def __init__(self, learning_rate=0.01, n_iterations=1000):
            self.learning_rate = learning_rate
            self.n_iterations = n_iterations
            self.weights = None
            self.bias = None

        def fit(self, X, y):
            n_samples, n_features = X.shape

            # Start from zero weights and zero bias
            self.weights = np.zeros(n_features)
            self.bias = 0

            for _ in range(self.n_iterations):
                for idx, x_i in enumerate(X):
                    linear_output = np.dot(x_i, self.weights) + self.bias
                    y_predicted = self.activation_function(linear_output)

                    # Perceptron rule: the update is zero when the prediction is correct
                    update = self.learning_rate * (y[idx] - y_predicted)
                    self.weights += update * x_i
                    self.bias += update

        def predict(self, X):
            linear_output = np.dot(X, self.weights) + self.bias
            y_predicted = self.activation_function(linear_output)
            return y_predicted

        def activation_function(self, x):
            # Step function: 1 where x >= 0, otherwise 0
            return np.where(x >= 0, 1, 0)


    # Example usage
    if __name__ == "__main__":
        # AND logic gate
        X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
        y = np.array([0, 0, 0, 1])

        p = Perceptron(learning_rate=0.1, n_iterations=10)
        p.fit(X, y)

        predictions = p.predict(X)
        print("Predictions:", predictions)